Traditionally, data centers have relied on air cooling, in which mechanical chillers help supply chilled air that absorbs heat from servers and keeps them within optimal operating conditions. But as AI models grow in size and the use of AI reasoning models rises, maintaining those conditions is becoming harder, more expensive and more energy-intensive.

While data centers once operated at 20 kW per rack, today’s hyperscale facilities can support over 135 kW per rack, nearly seven times as much, which makes it far harder to dissipate the heat generated by high-density racks. Keeping AI servers running at peak performance requires a new approach that is both efficient and scalable.

One key solution is liquid cooling — by reducing dependence on chillers and enabling more efficient heat rejection, liquid cooling is driving the next generation of high-performance, energy-efficient AI infrastructure.

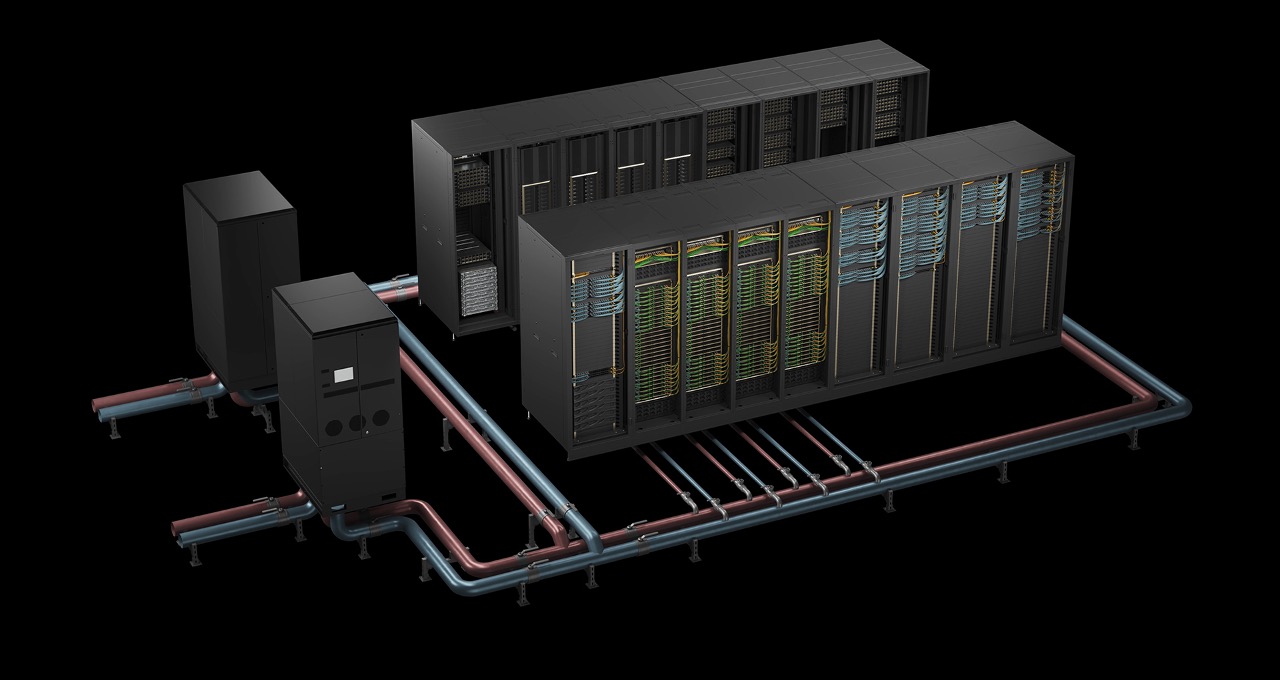

The NVIDIA GB200 NVL72 and the NVIDIA GB300 NVL72 are rack-scale, liquid-cooled systems designed to handle the demanding tasks of trillion-parameter large language model inference. Their architecture is also specifically optimized for test-time scaling accuracy and performance, making them an ideal choice for running AI reasoning models while efficiently managing energy costs and heat.

Driving Unprecedented Water Efficiency and Cost Savings in AI Data Centers

Historically, cooling alone has accounted for up to 40% of a data center’s electricity consumption, making it one of the most significant areas where efficiency improvements can drive down both operational expenses and energy demands.

Liquid cooling helps mitigate costs and energy use by capturing heat directly at the source. Instead of relying on air as an intermediary, direct-to-chip liquid cooling transfers heat in a technology cooling system loop. That heat is then cycled through a coolant distribution unit via liquid-to-liquid heat exchanger, and ultimately transferred to a facility cooling loop. Because of the higher efficiency of this heat transfer, data centers and AI factories can operate effectively with warmer water temperatures — reducing or eliminating the need for mechanical chillers in a wide range of climates.
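The chain of loops described above can be sketched as a simple temperature budget. This is a minimal illustration and not a design tool: the class name, field names and every temperature below are assumptions chosen for the example, including the maximum coolant supply temperature the IT equipment is taken to tolerate.

```python
from dataclasses import dataclass


@dataclass
class CoolingChain:
    """Hypothetical temperature budget from outdoor air to the chip-side loop."""
    ambient_c: float                  # outdoor dry-bulb air temperature, deg C
    heat_rejection_approach_c: float  # facility water minus ambient at the outdoor heat exchanger
    cdu_approach_c: float             # TCS supply minus facility water at the CDU heat exchanger

    def facility_supply_c(self) -> float:
        # Facility loop water cannot be colder than ambient plus the approach.
        return self.ambient_c + self.heat_rejection_approach_c

    def tcs_supply_c(self) -> float:
        # The CDU's liquid-to-liquid exchanger adds its own approach temperature.
        return self.facility_supply_c() + self.cdu_approach_c


def chiller_free(chain: CoolingChain, max_tcs_supply_c: float) -> bool:
    """True if the chip-side loop stays within its limit without a chiller."""
    return chain.tcs_supply_c() <= max_tcs_supply_c


# Illustrative values only (assumptions, not vendor specifications).
mild_day = CoolingChain(ambient_c=25.0, heat_rejection_approach_c=8.0, cdu_approach_c=5.0)
hot_day = CoolingChain(ambient_c=38.0, heat_rejection_approach_c=8.0, cdu_approach_c=5.0)

print(mild_day.tcs_supply_c())        # 38.0
print(chiller_free(mild_day, 40.0))   # True
print(chiller_free(hot_day, 40.0))    # False
```

The warmer the coolant the servers can accept, the more days of the year the check passes, which is the sense in which warmer water temperatures reduce the need for mechanical chillers.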

The NVIDIA GB200 NVL72 rack-scale, liquid-cooled system, built on the NVIDIA Blackwell platform, offers exceptional performance while balancing energy costs and heat. It packs unprecedented compute density into each server rack, delivering 40x higher revenue potential, 30x higher throughput, 25x greater energy efficiency and 300x greater water efficiency than traditional air-cooled architectures. Newer NVIDIA GB300 NVL72 systems built on the Blackwell Ultra platform boast 50x higher revenue potential and 35x higher throughput with 30x greater energy efficiency.

Data centers spend an estimated $1.9 million to $2.8 million per megawatt (MW) per year, of which nearly $500,000 goes to cooling-related energy and water costs. By deploying the liquid-cooled GB200 NVL72 system, hyperscale data centers and AI factories can achieve up to 25x cost savings, leading to over $4 million in annual savings for a 50 MW hyperscale data center.
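To put the quoted figures side by side, the short sketch below works through the arithmetic. It assumes the cooling figure of nearly $500,000 is per MW per year, like the total spend it is compared with. That is one reading of the text, and the article does not spell out how the 25x figure and the $4 million figure relate, so the sketch does not try to derive one from the other.

```python
# Figures quoted in the text, in USD. Rounded; "nearly" and "over" are dropped.
TOTAL_SPEND_LOW = 1_900_000        # per MW per year
TOTAL_SPEND_HIGH = 2_800_000       # per MW per year
COOLING_SPEND = 500_000            # assumed to be per MW per year
FACILITY_MW = 50
QUOTED_ANNUAL_SAVINGS = 4_000_000  # for the 50 MW facility


def share(part: float, whole: float) -> float:
    """Fraction of `whole` represented by `part`."""
    return part / whole


# Cooling as a share of total spend, at each end of the quoted range.
share_low = share(COOLING_SPEND, TOTAL_SPEND_HIGH)
share_high = share(COOLING_SPEND, TOTAL_SPEND_LOW)

# Cooling spend for the whole facility under the per-MW assumption.
facility_cooling_spend = COOLING_SPEND * FACILITY_MW

# Quoted savings expressed per MW.
savings_per_mw = QUOTED_ANNUAL_SAVINGS / FACILITY_MW

print(f"Cooling share of spend: {share_low:.0%} to {share_high:.0%}")  # 18% to 26%
print(f"Facility cooling spend: ${facility_cooling_spend:,}")           # $25,000,000
print(f"Quoted savings per MW:  ${savings_per_mw:,.0f}")                # $80,000
```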

For data center and AI factory operators, this means lower operational costs, enhanced energy efficiency metrics and a future-proof infrastructure that scales AI workloads efficiently — without the unsustainable water footprint of legacy cooling methods.

Moving Heat Outside the Data Center

As compute density rises and AI workloads drive unprecedented thermal loads, data centers and AI factories must rethink how they remove heat from their infrastructure. The traditional methods of heat rejection that supported predictable CPU-based scaling are no longer sufficient on their own. Today, there are multiple options for moving heat outside the facility, but four major categories dominate current and emerging deployments.

Key Cooling Methods in a Changing Landscape

- Mechanical Chillers: Mechanical chillers use a vapor compression cycle to cool water, which is then circulated through the data center to absorb heat. These systems are typically air-cooled or water-cooled, with the latter often paired with cooling towers to reject heat. While chillers are reliable and effective across diverse climates, they are also highly energy-intensive. In AI-scale facilities where power consumption and sustainability are top priorities, reliance on chillers can significantly impact both operational costs and carbon footprint.

- Evaporative Cooling: Evaporative cooling uses the evaporation of water to absorb and remove heat. This can be achieved through direct or indirect systems, or hybrid designs. These systems are much more energy-efficient than chillers but come with high water consumption. In large facilities, they can consume millions of gallons of water per megawatt annually. Their performance is also climate-dependent, making them less effective in humid or water-restricted regions.

- Dry Coolers: Dry coolers remove heat by transferring it from a closed liquid loop to the ambient air using large finned coils, much like an automotive radiator. These systems don’t rely on water and are ideal for facilities aiming to reduce water usage or operate in dry climates. However, their effectiveness depends heavily on the temperature of the surrounding air. In warmer environments, they may struggle to keep up with high-density cooling demands unless paired with liquid-cooled IT systems that can tolerate higher operating temperatures.

- Pumped Refrigerant Systems: Pumped refrigerant systems use liquid refrigerants to move heat from the data center to outdoor heat exchangers. Unlike chillers, these systems don’t rely on large compressors inside the facility and they operate without the use of water. This method offers a thermodynamically efficient, compact and scalable solution that works especially well for edge deployments and water-constrained environments. Proper refrigerant handling and monitoring are required, but the benefits in power and water savings are significant.

Each of these methods offers different advantages depending on factors like climate, rack density, facility design and sustainability goals. As liquid cooling becomes more common and servers are designed to operate with warmer water, the door opens to more efficient and environmentally friendly cooling strategies — reducing both energy and water use while enabling higher compute performance.
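The trade-offs among the four methods can be captured in a small lookup table. The sketch below encodes only the qualitative claims made in the list above; the class, field names and `shortlist` helper are hypothetical, and a value of "not stated" or `None` marks a property the text does not address.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class HeatRejectionMethod:
    name: str
    energy_use: str                    # "high", "low" or "not stated"
    water_use: str                     # "high", "none" or "varies"
    climate_dependent: Optional[bool]  # None where the text does not say


METHODS = [
    # Water-cooled chillers are often paired with cooling towers, so water use varies.
    HeatRejectionMethod("mechanical chiller", "high", "varies", False),
    HeatRejectionMethod("evaporative cooling", "low", "high", True),
    HeatRejectionMethod("dry cooler", "not stated", "none", True),
    HeatRejectionMethod("pumped refrigerant", "low", "none", None),
]


def shortlist(methods: List[HeatRejectionMethod],
              water_constrained: bool = False,
              avoid_high_energy: bool = False) -> List[str]:
    """Return the names of methods that pass the given site constraints."""
    result = []
    for m in methods:
        if water_constrained and m.water_use != "none":
            continue
        if avoid_high_energy and m.energy_use == "high":
            continue
        result.append(m.name)
    return result


print(shortlist(METHODS, water_constrained=True))
# ['dry cooler', 'pumped refrigerant']
print(shortlist(METHODS, avoid_high_energy=True))
# ['evaporative cooling', 'dry cooler', 'pumped refrigerant']
```

A real selection would weigh measured climate data, rack density and facility design rather than coarse labels like these.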

Optimizing Data Centers for AI Infrastructure

As AI workloads grow exponentially, operators are reimagining data center design with infrastructure built specifically for high-performance AI and energy efficiency. Whether they’re transforming their entire setup into dedicated AI factories or upgrading modular components, optimizing inference performance is crucial for managing costs and operational efficiency.

To get the best performance, GPUs with high compute capacity aren’t enough on their own. They also need to communicate with each other at lightning speed.

NVIDIA NVLink provides that high-speed communication, enabling the GPUs in a rack to operate as one massive, tightly integrated processing unit for maximum performance, at a full-rack power density of 120 kW. This tight coupling is crucial for today’s AI tasks, where every second saved on transferring data can mean more tokens per second and more efficient AI models.

Traditional air cooling struggles at these power levels. To keep up, data center air would need to be either cooled to below-freezing temperatures or flow at near-gale speeds to carry the heat away, making it increasingly impractical to cool dense racks with air alone.

At nearly 1,000x the density of air, liquid excels at carrying heat away thanks to its superior heat capacity and thermal conductivity. By efficiently transferring heat away from high-performance GPUs, liquid cooling reduces reliance on energy-intensive and noisy cooling fans, allowing more power to be allocated to computation rather than cooling overhead.
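A rough heat balance shows the scale of the difference. The sketch below uses the steady-state relation Q = ρ · V · c_p · ΔT to estimate the volumetric flow needed to carry away a 120 kW rack load. It assumes plain water as the coolant, approximate room-temperature fluid properties and a 10 K temperature rise across the rack; real systems may use a different coolant and a different temperature rise.

```python
# Approximate fluid properties near room temperature (textbook values).
AIR = {"density": 1.2, "cp": 1005.0}      # kg/m^3, J/(kg*K)
WATER = {"density": 997.0, "cp": 4186.0}  # kg/m^3, J/(kg*K)

RACK_HEAT_W = 120_000.0  # full-rack power density from the text, in watts
DELTA_T_K = 10.0         # assumed coolant temperature rise across the rack


def volumetric_flow_m3_per_s(heat_w: float, fluid: dict, delta_t_k: float) -> float:
    """Flow needed so that density * flow * cp * delta_T equals the heat load."""
    return heat_w / (fluid["density"] * fluid["cp"] * delta_t_k)


air_flow = volumetric_flow_m3_per_s(RACK_HEAT_W, AIR, DELTA_T_K)
water_flow = volumetric_flow_m3_per_s(RACK_HEAT_W, WATER, DELTA_T_K)
water_flow_l_per_min = water_flow * 1000.0 * 60.0  # m^3/s -> liters per minute

print(f"Air:   {air_flow:.2f} m^3/s")                  # about 9.95 m^3/s
print(f"Water: {water_flow_l_per_min:.0f} L/min")      # about 173 L/min
print(f"Air needs ~{air_flow / water_flow:.0f}x the volume flow of water")
```

Under these assumptions, air would have to move at several thousand times the volume flow of water to remove the same heat, which is why either a much larger temperature difference or very high air speed would be needed to cool such a rack with air alone.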

Liquid Cooling in Action

Innovators across the industry are leveraging liquid cooling to slash energy costs, improve density and drive AI efficiency:

- Vertiv’s reference architecture for NVIDIA GB200 NVL72 servers reduces annual energy consumption by 25%, cuts rack space requirements by 75% and shrinks the power footprint by 30%.

- Schneider Electric’s liquid-cooling infrastructure supports up to 132 kW per rack, improving energy efficiency, scalability and overall performance for GB200 NVL72 AI data centers.

- CoolIT Systems’ high-density CHx2000 liquid-to-liquid coolant distribution units provide 2 MW of cooling capacity at a 5°C approach temperature, ensuring reliable thermal management for GB300 NVL72 deployments. CoolIT Systems’ OMNI All-Metal Coldplates with patented Split-Flow technology also provide targeted cooling of over 4,000 W of thermal design power while reducing pressure drop.

- Boyd’s advanced liquid-cooling solutions, which draw on the company’s more than two decades of experience in the high-performance compute industry, include coolant distribution units, liquid-cooling loops and cold plates to further maximize energy efficiency and system reliability for high-density AI workloads.

Cloud service providers are also adopting cutting-edge cooling and power innovations. Next-generation AWS data centers, featuring jointly developed liquid cooling solutions, increase compute power by 12% while reducing energy consumption by up to 46% — all while maintaining water efficiency.

Cooling the AI Infrastructure of the Future

As AI continues to push the limits of computational scale, innovations in cooling will be essential to meeting the thermal management challenges of the post-Moore’s law era.

NVIDIA is leading this transformation through initiatives like the COOLERCHIPS program, a U.S. Department of Energy-backed effort to develop modular data centers with next-generation cooling systems that are projected to reduce costs by at least 5% and improve efficiency by 20% over traditional air-cooled designs.

Looking ahead, data centers must evolve not only to support AI’s growing demands but also to do so sustainably, maximizing energy and water efficiency while minimizing environmental impact. By embracing high-density architectures and advanced liquid cooling, the industry is paving the way for a more efficient AI-powered future.

Learn more about breakthrough solutions for data center energy and water efficiency presented at NVIDIA GTC 2025 and discover how accelerated computing is driving a more efficient future with NVIDIA Blackwell.
