Robots are moving goods in warehouses, packaging foods and helping assemble vehicles — bringing enhanced automation to use cases across industries.

There are two keys to their success: Physical AI and robotics simulation.

Physical AI describes AI models that can understand and interact with the physical world. Physical AI embodies the next wave of autonomous machines and robots, such as self-driving cars, industrial manipulators, mobile robots, humanoids and even robot-run infrastructure like factories and warehouses.

Through virtual commissioning in digital worlds, robots are first trained in robotics simulation software before they are deployed in real-world use cases.

Robotics Simulation Summarized

An advanced robotics simulator facilitates robot learning and testing of virtual robots without requiring the physical robot. By applying physics principles and replicating real-world conditions, these simulators generate synthetic datasets to train machine learning models for deployment on physical robots.
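As a rough illustration of how a simulator can produce labeled synthetic data, the sketch below randomizes a few scene parameters per sample. It assumes a toy scene description: the `SceneSample` fields, value ranges and label names are all hypothetical, and no simulator API is used. A real pipeline would render images and annotations from scenes like these.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneSample:
    """One randomized scene description (hypothetical fields, not a simulator API)."""
    light_intensity: float   # arbitrary units
    object_xy: tuple         # object position on a table, in meters
    object_yaw_deg: float    # object rotation about the vertical axis
    label: str               # ground-truth class, known for free in simulation


def generate_synthetic_samples(n, labels=("box", "bottle", "can"), seed=0):
    """Draw n randomized scenes; the seed makes the dataset reproducible."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        samples.append(SceneSample(
            light_intensity=rng.uniform(200.0, 1200.0),
            object_xy=(rng.uniform(-0.5, 0.5), rng.uniform(-0.3, 0.3)),
            object_yaw_deg=rng.uniform(0.0, 360.0),
            label=rng.choice(labels),
        ))
    return samples


if __name__ == "__main__":
    for sample in generate_synthetic_samples(3):
        print(sample)
```

Varying lighting and pose per sample is one common way to expose a perception model to more conditions than a small set of real photographs would cover.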

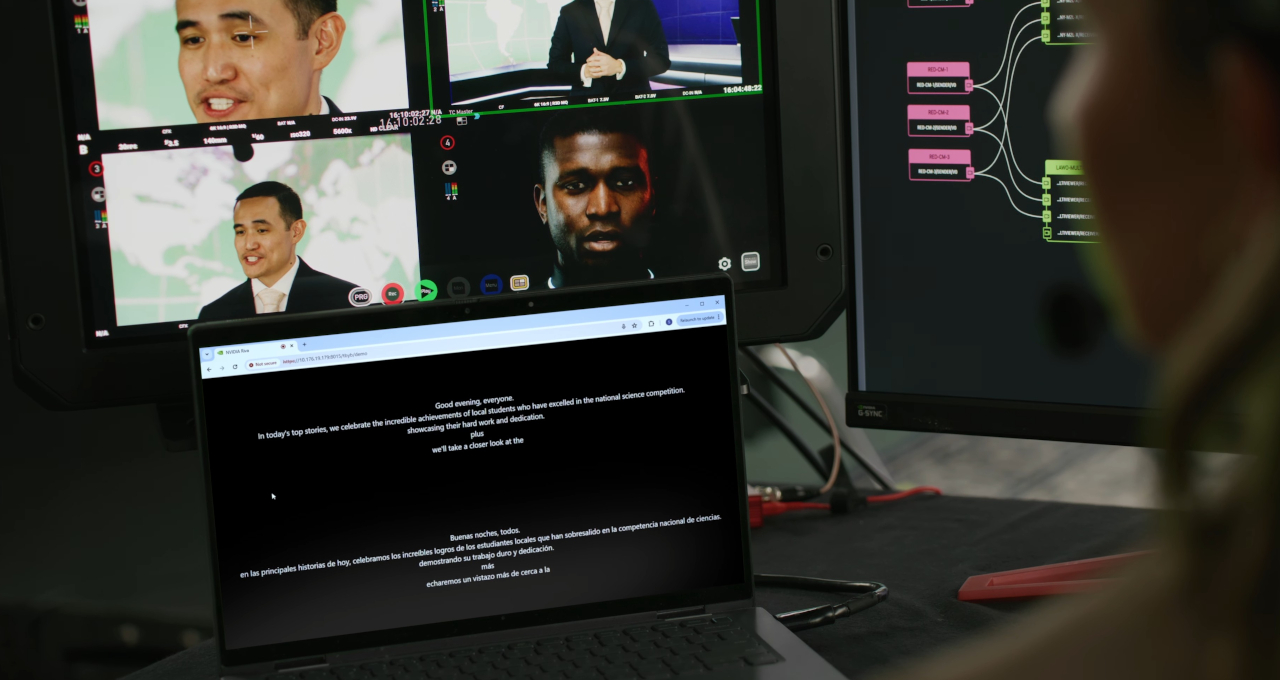

Simulations are used for initial AI model training and then to validate the entire software stack, minimizing the need for physical robots during testing. NVIDIA Isaac Sim, a reference application built on the NVIDIA Omniverse platform, provides accurate visualizations and supports Universal Scene Description (OpenUSD)-based workflows for advanced robot simulation and validation.

NVIDIA’s Three-Computer Framework Facilitates Robot Simulation

Three computers are needed to train and deploy robot technology.

- A supercomputer to train and fine-tune powerful foundation and generative AI models.

- A development platform for robotics simulation and testing.

- An onboard runtime computer to deploy trained models to physical robots.

Only after adequate training in simulated environments can physical robots be commissioned.

The NVIDIA DGX platform can serve as the first computing system to train models.

NVIDIA Omniverse running on NVIDIA OVX servers functions as the second computer system, providing the development platform and simulation environment for testing, optimizing and debugging physical AI.

NVIDIA Jetson Thor robotics computers designed for onboard computing serve as the third runtime computer.

Who Uses Robotics Simulation?

Today, robots and robotics simulation are improving operations across a wide range of use cases.

Delta Electronics, a global leader in power and thermal technologies, uses simulation to test its optical inspection algorithms, which detect product defects on production lines.

Deep tech startup Wandelbots is building a custom simulator by integrating Isaac Sim into its application, making it easy for end users to program robotic work cells in simulation and seamlessly transfer models to a real robot.

Boston Dynamics is enabling researchers and developers through its reinforcement learning researcher kit.

Robotics company Fourier is simulating real-world conditions to train humanoid robots with the precision and agility needed for close robot-human collaboration.

Using NVIDIA Isaac Sim, robotics company Galbot built DexGraspNet, a comprehensive simulated dataset for dexterous robotic grasps containing over 1 million ShadowHand grasps on 5,300+ objects. The dataset can be applied to any dexterous robotic hand to accomplish complex tasks that require fine-motor skills.

Using Robotics Simulation for Planning and Control Outcomes

In complex and dynamic industrial settings, robotics simulation is evolving to integrate digital twins, enhancing planning, control and learning outcomes.

Developers import computer-aided design models into a robotics simulator to build virtual scenes and employ algorithms to create the robot operating system and enable task and motion planning. While traditional methods involve prescribing control signals, the shift toward machine learning allows robots to learn behaviors through methods like imitation and reinforcement learning, using simulated sensor signals.

This evolution continues with digital twins in complex facilities like manufacturing assembly lines, where developers can test and refine real-time AI models entirely in simulation. This approach saves software development time and costs, and reduces downtime by anticipating issues. For instance, using NVIDIA Omniverse, Metropolis and cuOpt, developers can use digital twins to develop, test and refine physical AI in simulation before deploying in industrial infrastructure.

High-Fidelity, Physics-Based Simulation Breakthroughs

High-fidelity, physics-based simulations have supercharged industrial robotics by enabling realistic experimentation in virtual environments.

NVIDIA PhysX, integrated into Omniverse and Isaac Sim, empowers roboticists to develop fine- and gross-motor skills for robot manipulators, rigid and soft body dynamics, vehicle dynamics and other critical features that ensure the robot obeys the laws of physics. This includes precise control over actuators and modeling of kinematics, which are essential for accurate robot movements.

To close the sim-to-real gap, Isaac Lab offers a high-fidelity, open-source framework for reinforcement learning and imitation learning that facilitates seamless policy transfer from simulated environments to physical robots. With GPU parallelization, Isaac Lab accelerates training and improves performance, making complex tasks more achievable and safe for industrial robots.
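To give a feel for why parallel simulation speeds up training, here is a minimal sketch of a batched environment interface, where one `step` call advances many environments at once. It is a CPU-only toy in plain Python and does not use Isaac Lab. `BatchedPointEnv`, `proportional_policy` and `rollout` are hypothetical names, and the fixed proportional controller stands in for a learned policy.

```python
import random


class BatchedPointEnv:
    """Toy batch of 1D environments: each agent is rewarded for reaching the origin."""

    def __init__(self, num_envs, seed=0):
        self.rng = random.Random(seed)
        self.num_envs = num_envs
        self.positions = [0.0] * num_envs
        self.reset()

    def reset(self):
        """Place every agent at a random start and return the observations."""
        self.positions = [self.rng.uniform(-1.0, 1.0) for _ in range(self.num_envs)]
        return list(self.positions)

    def step(self, actions):
        """Advance all environments by one step; one action per environment."""
        rewards = []
        for i, action in enumerate(actions):
            action = max(-0.1, min(0.1, action))   # clip to the actuator limit
            self.positions[i] += action
            rewards.append(-abs(self.positions[i]))  # closer to origin is better
        return list(self.positions), rewards


def proportional_policy(observations, gain=0.5):
    """Stand-in for a learned policy: push each agent toward the origin."""
    return [-gain * x for x in observations]


def rollout(env, policy, steps):
    """Collect experience from every environment in lockstep."""
    obs = env.reset()
    totals = [0.0] * env.num_envs
    for _ in range(steps):
        obs, rewards = env.step(policy(obs))
        totals = [t + r for t, r in zip(totals, rewards)]
    return obs, totals


if __name__ == "__main__":
    final_obs, returns = rollout(BatchedPointEnv(num_envs=4), proportional_policy, steps=50)
    print(final_obs, returns)
```

On a GPU, the per-environment loop inside `step` would be replaced by tensor operations, so thousands of environments can advance in a single call and the policy collects far more experience per second.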

To learn more about creating a locomotion reinforcement learning policy with Isaac Sim and Isaac Lab, read this developer blog.

Teaching Collision-Free Motion for Autonomy

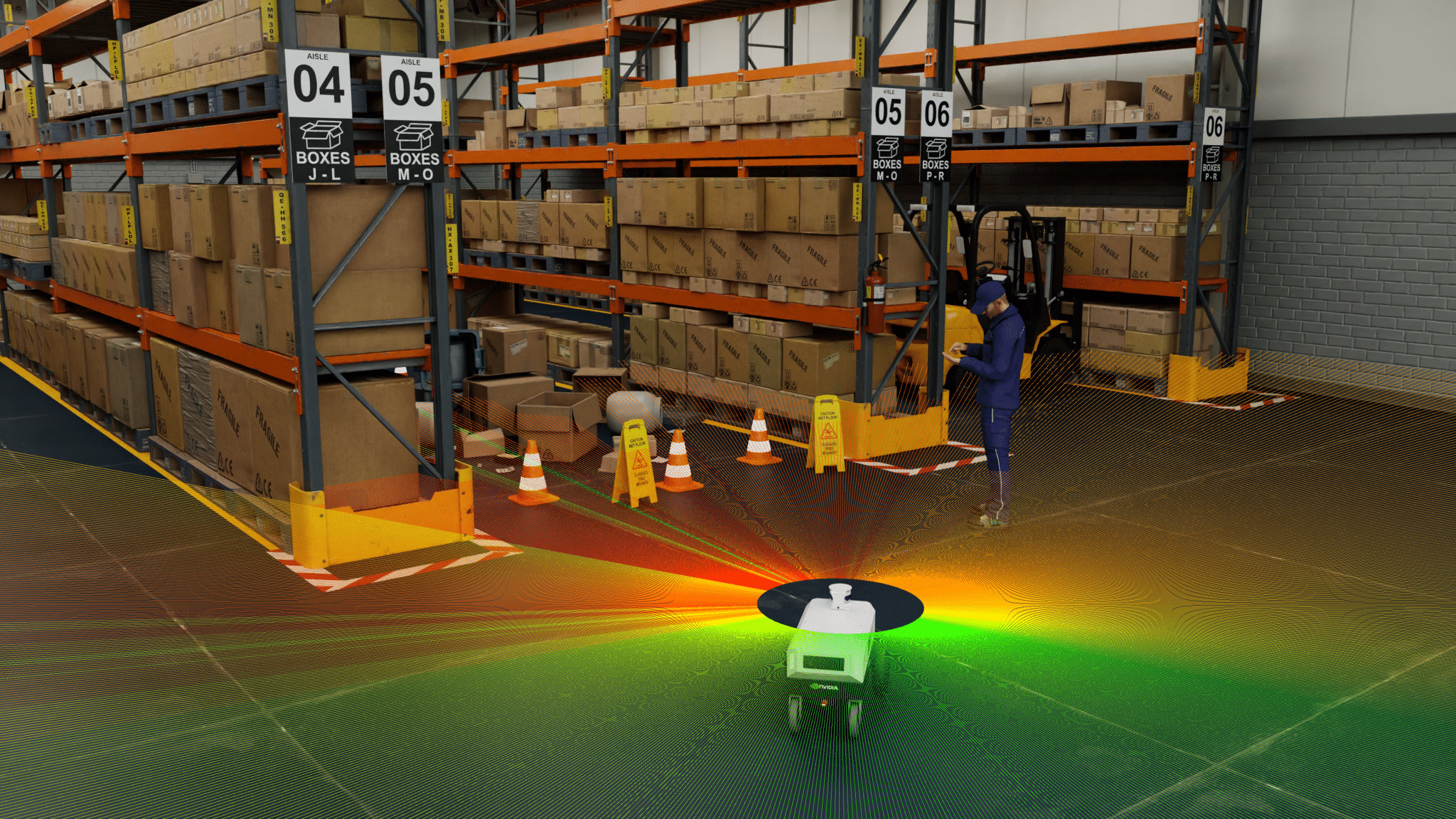

Industrial robot training often occurs in specific settings like factories or fulfillment centers, where simulations help address challenges related to various robot types and chaotic environments. A critical aspect of these simulations is generating collision-free motion in unknown, cluttered environments.

Traditional motion planning approaches that attempt to address these challenges can come up short in unknown or dynamic environments. SLAM, or simultaneous localization and mapping, can be used to generate 3D maps of environments with camera images from multiple viewpoints. However, these maps must be revised whenever objects move or the environment changes.

The NVIDIA Robotics research team and the University of Washington introduced Motion Policy Networks (MπNets), an end-to-end neural policy that generates real-time, collision-free motion using a single fixed camera’s data stream. Trained on over 3 million motion planning problems and 700 million simulated point clouds, MπNets navigates unknown real-world environments effectively.

While the MπNets model applies direct learning for trajectories, the team also developed a point cloud-based collision model called CabiNet, trained on over 650,000 procedurally generated simulated scenes.

With the CabiNet model, developers can deploy general-purpose pick-and-place policies for unknown objects beyond a flat tabletop setup. Training with a large synthetic dataset allowed the model to generalize to out-of-distribution scenes in a real kitchen environment, without needing any real data.
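For readers new to point clouds, the sketch below shows the basic question a collision model has to answer: does a planned motion come too close to any observed point? It is a brute-force geometric check, not MπNets or CabiNet, which are learned models. The function names and the `robot_radius` value are assumptions chosen for illustration, and the robot is approximated as a single sphere.

```python
import math


def min_clearance(waypoint, point_cloud):
    """Distance from a waypoint to the nearest point in the cloud."""
    if not point_cloud:
        return math.inf  # nothing observed, so nothing to collide with
    return min(math.dist(waypoint, p) for p in point_cloud)


def first_collision(waypoints, point_cloud, robot_radius=0.05):
    """Return the index of the first waypoint in collision, or None if the path is clear."""
    for index, waypoint in enumerate(waypoints):
        if min_clearance(waypoint, point_cloud) < robot_radius:
            return index
    return None


def interpolate(start, goal, steps):
    """Straight-line path from start to goal with steps + 1 waypoints."""
    return [
        tuple(s + (g - s) * t / steps for s, g in zip(start, goal))
        for t in range(steps + 1)
    ]


if __name__ == "__main__":
    cloud = [(0.5, 0.0, 0.0)]  # a single obstacle point, in meters
    blocked = interpolate((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), steps=10)
    clear = interpolate((0.0, 0.2, 0.0), (1.0, 0.2, 0.0), steps=10)
    print(first_collision(blocked, cloud), first_collision(clear, cloud))
```

A check like this scales poorly with cloud size and says nothing about how to route around an obstacle, which is part of the motivation for learned approaches trained on large simulated datasets.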

How Developers Can Get Started Building Robotic Simulators

Get started with technical resources, reference applications and other solutions for developing physically accurate simulation pipelines by visiting the NVIDIA Robotics simulation use case page.

Robot developers can tap into NVIDIA Isaac Sim, which supports multiple robot training techniques:

- Synthetic data generation for training perception AI models

- Software-in-the-loop testing for the entire robot stack

- Robot policy training with Isaac Lab

Developers can also pair ROS 2 with Isaac Sim to train, simulate and validate their robot systems. The Isaac Sim to ROS 2 workflow is similar to workflows executed with other robot simulators such as Gazebo. It starts with bringing a robot model into a prebuilt Isaac Sim environment and adding sensors to the robot. The relevant components are then connected to the ROS 2 action graph, and the robot is simulated by controlling it through ROS 2 packages.

Stay up to date by subscribing to our newsletter and following NVIDIA Robotics on LinkedIn, Instagram, X and Facebook.
