Cloud-native technologies have become crucial for developers to create and implement scalable applications in dynamic cloud environments.

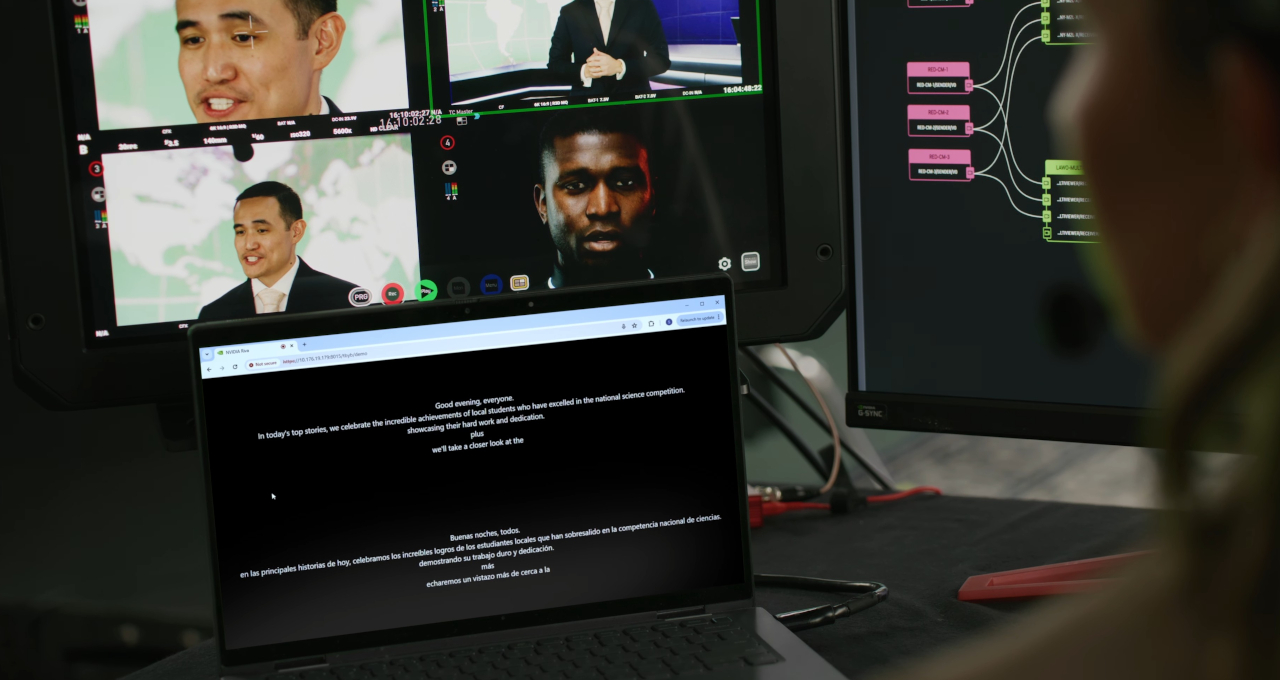

This week at KubeCon + CloudNativeCon North America 2024, one of the most-attended conferences focused on open-source technologies, Chris Lamb, vice president of computing software platforms at NVIDIA, delivered a keynote outlining the benefits of open source for developers and enterprises alike. NVIDIA also offered nearly 20 interactive sessions with engineers and experts.

The Cloud Native Computing Foundation (CNCF), part of the Linux Foundation and host of KubeCon, is at the forefront of championing a robust ecosystem to foster collaboration among industry leaders, developers and end users.

As a member of CNCF since 2018, NVIDIA is working across the developer community to contribute to and sustain cloud-native open-source projects. Our open-source software and more than 750 NVIDIA-led open-source projects help democratize access to tools that accelerate AI development and innovation.

Empowering Cloud-Native Ecosystems

NVIDIA has benefited from the many open-source projects under CNCF and has made contributions to dozens of them over the past decade. These contributions help developers build applications and microservice architectures suited to managing AI and machine learning workloads.

Kubernetes, the cornerstone of cloud-native computing, is undergoing a transformation to meet the challenges of AI and machine learning workloads. As organizations increasingly adopt large language models and other AI technologies, robust infrastructure becomes paramount.

NVIDIA has been working closely with the Kubernetes community to address these challenges. This includes:

- Work on dynamic resource allocation (DRA) that allows for more flexible and nuanced resource management. This is crucial for AI workloads, which often require specialized hardware. NVIDIA engineers played a key role in designing and implementing this feature.

- Leading efforts in KubeVirt, an open-source project extending Kubernetes to manage virtual machines alongside containers. This provides a unified, cloud-native approach to managing hybrid infrastructure.

- Development of NVIDIA GPU Operator, which automates the lifecycle management of NVIDIA GPUs in Kubernetes clusters. This software simplifies the deployment and configuration of GPU drivers, runtime and monitoring tools, allowing organizations to focus on building AI applications rather than managing infrastructure.
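To make the GPU scheduling piece concrete, here is a minimal sketch of how a workload might ask Kubernetes for a GPU once the drivers and device plugin are in place. It builds a Pod manifest as a plain Python dictionary and prints it as JSON, which `kubectl apply -f` accepts. The `nvidia.com/gpu` resource name is the one advertised by the NVIDIA device plugin; the helper name `gpu_pod_manifest`, the Pod name and the container image are hypothetical placeholders chosen for illustration, not taken from the keynote or from NVIDIA documentation.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal Pod manifest that requests NVIDIA GPUs.

    Hypothetical helper for illustration only. It assumes the cluster
    already exposes the `nvidia.com/gpu` extended resource, for example
    through the device plugin that the GPU Operator deploys.
    """
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Extended resources such as GPUs are requested under
                    # `limits`; the scheduler places the Pod on a node
                    # with enough free GPUs.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Placeholder image name; substitute a real CUDA-enabled image.
    manifest = gpu_pod_manifest("gpu-demo", "example.com/my-cuda-app:latest")
    print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and applying it with `kubectl apply -f` would be one way to try this on a cluster where GPUs are already exposed. The newer dynamic resource allocation APIs describe devices differently, so treat this as a sketch of the long-standing extended-resource approach only.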

The company’s open-source efforts extend beyond Kubernetes to other CNCF projects:

- NVIDIA is a key contributor to Kubeflow, a comprehensive toolkit that makes it easier for data scientists and engineers to build and manage ML systems on Kubernetes. Kubeflow reduces the complexity of infrastructure management and allows users to focus on developing and improving ML models.

- NVIDIA has contributed to the development of the Cluster Network Addons Operator (CNAO), which manages the lifecycle of host networks in Kubernetes clusters.

- NVIDIA has also contributed to Node Health Check, which provides virtual machine high availability.

NVIDIA has also assisted with projects that address observability, performance and other critical areas of cloud-native computing, such as:

- Prometheus: Enhancing monitoring and alerting capabilities

- Envoy: Improving distributed proxy performance

- OpenTelemetry: Advancing observability in complex, distributed systems

- Argo: Facilitating Kubernetes-native workflows and application management

Community Engagement

NVIDIA engages the cloud-native ecosystem by participating in CNCF events and activities, including:

- Collaboration with cloud service providers to help them onboard new workloads.

- Participation in CNCF’s special interest groups and working groups focused on AI.

- Participation in industry events such as KubeCon + CloudNativeCon, where it shares insights on GPU acceleration for AI workloads.

- Work with CNCF-adjacent projects in the Linux Foundation as well as many partners.

This engagement translates into concrete benefits for developers, such as improved efficiency in managing AI and ML workloads; enhanced scalability and performance of cloud-native applications; better resource utilization, which can lead to cost savings; and simplified deployment and management of complex AI infrastructures.

As AI and machine learning continue to transform industries, NVIDIA is helping advance cloud-native technologies to support compute-intensive workloads. This includes facilitating the migration of legacy applications and supporting the development of new ones.

These contributions to the open-source community help developers harness the full potential of AI technologies and strengthen Kubernetes and other CNCF projects as the tools of choice for AI compute workloads.

Check out NVIDIA’s keynote at KubeCon + CloudNativeCon North America 2024 delivered by Chris Lamb, where he discusses the importance of CNCF projects in building and delivering AI in the cloud and NVIDIA’s contributions to the community to push the AI revolution forward.
