Speed Demon: NVIDIA Blackwell Takes Pole Position in Latest MLPerf Inference Results

In the latest MLPerf Inference v5.0 benchmarks, which reflect some of the most challenging inference scenarios, the NVIDIA Blackwell platform set records, and the round marked NVIDIA’s first MLPerf submission using the NVIDIA GB200 NVL72 system, a rack-scale solution designed for AI reasoning. Delivering on the promise of cutting-edge AI takes a new kind of compute

AI Factories Are Redefining Data Centers and Enabling the Next Era of AI

AI is fueling a new industrial revolution — one driven by AI factories. Unlike traditional data centers, AI factories do more than store and process data — they manufacture intelligence at scale, transforming raw data into real-time insights. For enterprises and countries around the world, this means dramatically faster time to value — turning AI

NVIDIA Blackwell Now Generally Available in the Cloud

AI reasoning models and agents are set to transform industries, but delivering their full potential at scale requires massive compute and optimized software. The “reasoning” process involves multiple models, generates many additional tokens and demands infrastructure that combines high-speed communication, memory and compute to ensure real-time, high-quality results. To meet this demand, CoreWeave

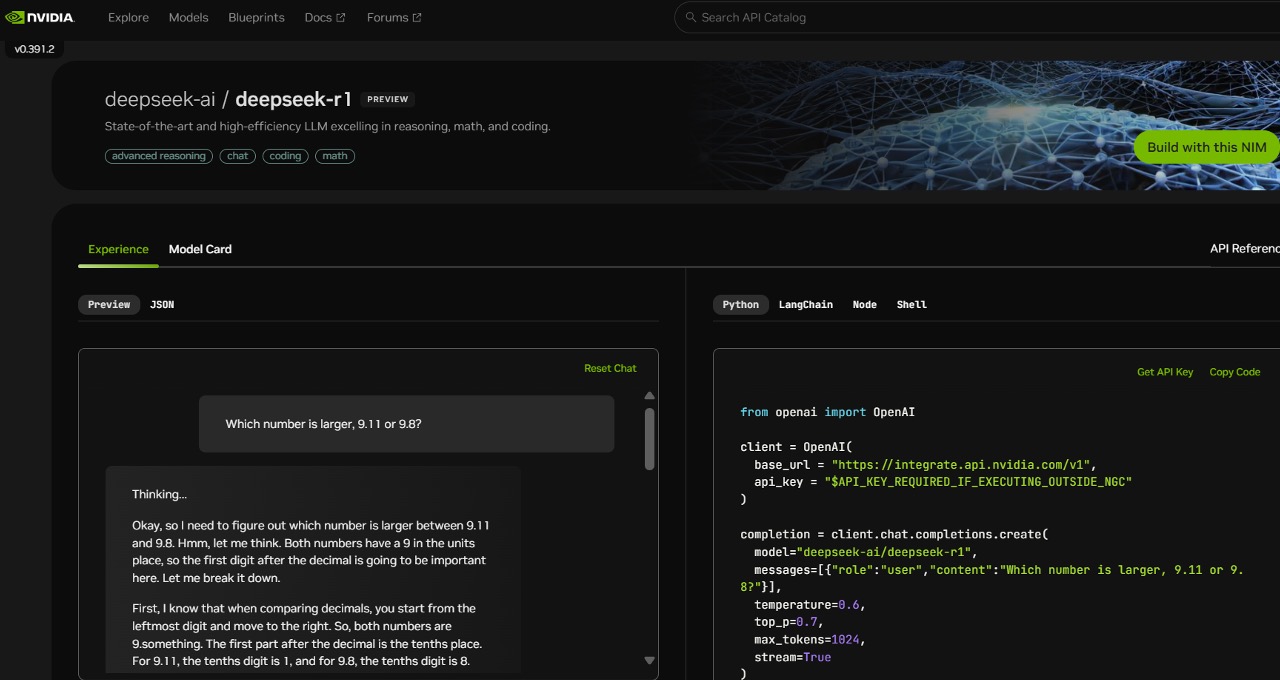

DeepSeek-R1 Now Live With NVIDIA NIM

DeepSeek-R1 is an open model with state-of-the-art reasoning capabilities. Instead of offering direct responses, reasoning models like DeepSeek-R1 perform multiple inference passes over a query, conducting chain-of-thought, consensus and search methods to generate the best answer. Performing this sequence of inference passes — using reason to arrive at the best answer — is known as

2025 Predictions: Enterprises, Researchers and Startups Home In on Humanoids, AI Agents as Generative AI Crosses the Chasm

From boardroom to break room, generative AI took this year by storm, stirring discussion across industries about how to best harness the technology to enhance innovation and creativity, improve customer service, transform product development and even boost communication. The adoption of generative AI and large language models is rippling through nearly every industry, as incumbents

Hopper Scales New Heights, Accelerating AI and HPC Applications for Mainstream Enterprise Servers

Since its introduction, the NVIDIA Hopper architecture has transformed the AI and high-performance computing (HPC) landscape, helping enterprises, researchers and developers tackle the world’s most complex challenges with higher performance and greater energy efficiency. During the Supercomputing 2024 conference, NVIDIA announced the availability of the NVIDIA H200 NVL PCIe GPU — the latest addition to

What’s the ROI? Getting the Most Out of LLM Inference

Large language models and the applications they power enable unprecedented opportunities for organizations to get deeper insights from their data reservoirs and to build entirely new classes of applications. But with opportunities often come challenges. Both on premises and in the cloud, applications that are expected to run in real time place significant demands on

NVIDIA and Oracle to Accelerate AI and Data Processing for Enterprises

Enterprises are looking for increasingly powerful compute to support their AI workloads and accelerate data processing. The efficiency gained can translate to better returns for their investments in AI training and fine-tuning, and improved user experiences for AI inference. At the Oracle CloudWorld conference today, Oracle Cloud Infrastructure (OCI) announced the first zettascale OCI Supercluster,

NVIDIA Blackwell Sets New Standard for Generative AI in MLPerf Inference Debut

As enterprises race to adopt generative AI and bring new services to market, the demands on data center infrastructure have never been greater. Training large language models is one challenge, but delivering LLM-powered real-time services is another. In the latest round of MLPerf industry benchmarks, Inference v4.1, NVIDIA platforms delivered leading performance across all data

NVIDIA to Present Innovations at Hot Chips That Boost Data Center Performance and Energy Efficiency

A deep technology conference for processor and system architects from industry and academia has become a key forum for the trillion-dollar data center computing market. At Hot Chips 2024 next week, senior NVIDIA engineers will present the latest advancements powering the NVIDIA Blackwell platform, plus research on liquid cooling for data centers and AI agents

National Robotics Week — Latest Physical AI Research, Breakthroughs and Resources
